I ran into difficulties while installing and configuring Hadoop, so I wrote this simple guide to the installation and configuration of Hadoop 1.x. I assume that readers know basic Linux commands, so I do not explain those commands in detail.

I used Hadoop 1.2.1, JDK 7, and Ubuntu (Linux) in this setup.

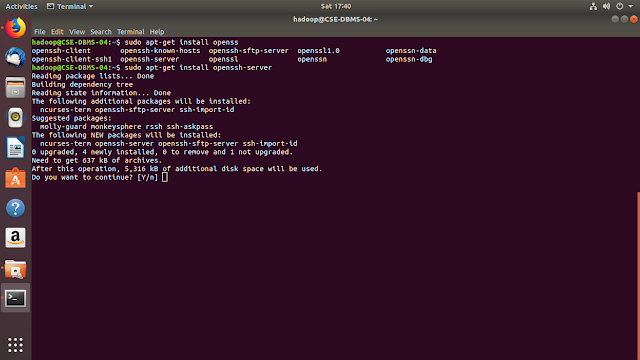

Install SSH:

- Hadoop's start and stop scripts use SSH to log in to every machine in the cluster (including localhost on a single node) and launch the daemons there

Commands:

- sudo apt-get update

- updates the list of packages

- sudo apt-get install openssh-server

- Installs OpenSSH Server

Generate Keys:

- Hadoop's scripts log in to the cluster machines over SSH each time the daemons are started or stopped. Therefore, we need to set up passwordless login for Hadoop on all the nodes in our cluster.

Commands:

- ssh CSE-DBMS-04

- replace CSE-DBMS-04 with your system's hostname or localhost. At this point it asks for a password

- ssh-keygen

- generates SSH Keys

- ENTER FILE NAME:

- leave it empty and press Enter to use the default file name

- ENTER PASSPHRASE:

- leave it empty and press Enter; an empty passphrase is what allows login without any prompt

- cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

- append the public key (id_rsa.pub) to authorized_keys to allow passwordless login for the user

- ssh CSE-DBMS-04

- Now it should not ask for a password
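The key setup above can also be scripted. The following is only a minimal sketch, assuming the default RSA key location; `setup_passwordless_ssh` is a hypothetical helper name of mine, not something shipped with OpenSSH or Hadoop.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive version of the key setup steps.
# setup_passwordless_ssh is a hypothetical helper, not part of OpenSSH or Hadoop.
setup_passwordless_ssh() {
    local ssh_dir="${1:-$HOME/.ssh}"
    local key="$ssh_dir/id_rsa"

    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1

    # Generate a key pair with an empty passphrase only if none exists yet.
    if [ ! -f "$key" ]; then
        ssh-keygen -q -t rsa -N "" -f "$key" || return 1
    fi

    # Append the public key once; repeated runs do not duplicate it.
    touch "$ssh_dir/authorized_keys"
    if ! grep -qxF -- "$(cat "$key.pub")" "$ssh_dir/authorized_keys"; then
        cat "$key.pub" >> "$ssh_dir/authorized_keys"
    fi

    # sshd may refuse an authorized_keys file that other users can write to.
    chmod 600 "$ssh_dir/authorized_keys"
}
```

After calling `setup_passwordless_ssh`, a command such as `ssh -o BatchMode=yes localhost true` should succeed without any prompt, because `BatchMode=yes` makes ssh fail instead of asking for a password.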

Install Java

- I prefer offline Java installation, so I have already downloaded the Java tarball and placed it in my Downloads directory

Commands:

- sudo mkdir -p /usr/lib/jvm/

- create a directory for Java

- sudo tar -xvf ~/Downloads/jdk-7u67-linux-x64.tar.gz -C /usr/lib/jvm

- extract the contents of the Java tarball into the Java directory

- cd /usr/lib/jvm

- go to Java directory

- sudo ln -s jdk1.7.0_67 java-1.7.0-sun-amd64

- create a symbolic link to the JDK directory, which will be used in the Hadoop configuration

- sudo update-alternatives --config java

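- check and select the Java alternative. This lists only the JDKs already registered with update-alternatives, so a JDK unpacked from a tarball does not appear here until it is registered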

- sudo nano $HOME/.bashrc

- set the Java path by adding the following two lines at the end of this file

- export JAVA_HOME="/usr/lib/jvm/jdk1.7.0_67"

- export PATH="$PATH:$JAVA_HOME/bin"

- exec bash

- restarts bash (the terminal session) so that the new variables take effect

- java

- it should not show a "command not found" error
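If you rerun these steps, the same export lines can pile up in .bashrc. Here is a small sketch that adds a line only when it is missing; `append_once` is a hypothetical helper of mine, and the paths are the ones used in this guide.

```shell
#!/usr/bin/env bash
# append_once FILE LINE: hypothetical helper that appends LINE to FILE
# only if an identical line is not already present.
append_once() {
    local file="$1" line="$2"
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example calls (commented out so that sourcing this file changes nothing):
# append_once "$HOME/.bashrc" 'export JAVA_HOME="/usr/lib/jvm/jdk1.7.0_67"'
# append_once "$HOME/.bashrc" 'export PATH="$PATH:$JAVA_HOME/bin"'
```

After restarting bash, running `"$JAVA_HOME/bin/java" -version` is a more direct check than plain `java`, since it confirms that JAVA_HOME itself points at a working JDK.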

Install Hadoop:

- First, we need to download the required tarball of Hadoop.

Commands:

- sudo mkdir -p /usr/local/hadoop/

- create Hadoop directory

- cd ~/Downloads

- sudo tar -xvf ~/Downloads/hadoop-1.2.1-bin.tar.gz

- sudo cp -r hadoop-1.2.1/* /usr/local/hadoop/

- extract and copy Hadoop files from tarball to the Hadoop directory

- sudo nano $HOME/.bashrc

- set the Hadoop path by adding the following lines at the end of this file

- export HADOOP_PREFIX=/usr/local/hadoop

- export PATH=$PATH:$HADOOP_PREFIX/bin

- exec bash

- restarts bash (the terminal session) so that the new variables take effect

- hadoop

- it should not show a "command not found" error

Configuration of Hadoop

- We set some environment variables and change some configuration files according to our cluster setup.

Commands:

- cd /usr/local/hadoop/conf

- go to configuration directory of Hadoop

- sudo nano hadoop-env.sh

- open the environment variable file and add the following two lines. Both variables already appear in the file with different values; leave those existing lines as they are and add each new line right after its existing counterpart

- export JAVA_HOME=/usr/lib/jvm/java-1.7.0-sun-amd64

- export HADOOP_OPTS=-Djava.net.preferIPv4Stack=true

- sudo nano core-site.xml

- open the core configuration file and set the name node address and the tmp directory to the values listed below. Use your hostname instead of "CSE-DBMS-04"

- fs.default.name hdfs://CSE-DBMS-04:10001

- hadoop.tmp.dir /usr/local/hadoop/tmp

- sudo nano mapred-site.xml

- open the Map Reduce configuration file and set the job tracker address. Use your hostname instead of "CSE-DBMS-04"

- mapred.job.tracker CSE-DBMS-04:10002

- sudo mkdir /usr/local/hadoop/tmp

- create tmp directory to store all files on the data node

- sudo chown hadoop /usr/local/hadoop/tmp

- change the owner of the directory to avoid access control issues. Write your username instead of "hadoop"

- sudo chown hadoop /usr/local/hadoop

- change the owner of the directory to avoid access control issues. Write your username instead of "hadoop"
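The property names and values above go inside `<property>` elements in each XML file. As a sketch of what the two files could look like, the function below writes them with the values used in this guide. `write_site_configs` is a hypothetical helper name; it overwrites any existing core-site.xml and mapred-site.xml in the target directory, so treat it as an illustration rather than the only way to edit these files.

```shell
#!/usr/bin/env bash
# write_site_configs CONF_DIR HOSTNAME: hypothetical helper that writes
# core-site.xml and mapred-site.xml with the values used in this guide.
# Existing files with those names in CONF_DIR are overwritten.
write_site_configs() {
    local conf_dir="$1" host="$2"

    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://${host}:10001</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
  </property>
</configuration>
EOF

    cat > "$conf_dir/mapred-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>${host}:10002</value>
  </property>
</configuration>
EOF
}
```

A call such as `write_site_configs /usr/local/hadoop/conf "$(hostname)"` would fill in the local hostname; it needs write permission on the conf directory.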

Format DFS (skip this step if you are going for Multi-Node setup):

- Now we are ready to format our Distributed File System (DFS)

Command:

- hadoop namenode -format

- Check the output for a message saying that the storage directory "has been successfully formatted"
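Formatting an existing name node wipes its HDFS metadata, so it can be worth checking first whether a format has already been done. This is a sketch under one assumption: the name directory is at its Hadoop 1.x default location, `dfs/name` under hadoop.tmp.dir, which is the case when only the properties from this guide are set. `namenode_is_formatted` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# namenode_is_formatted [TMP_DIR]: hypothetical helper.
# Assumes the name directory is at the default TMP_DIR/dfs/name;
# a formatted name directory contains current/VERSION.
namenode_is_formatted() {
    local tmp_dir="${1:-/usr/local/hadoop/tmp}"
    [ -f "$tmp_dir/dfs/name/current/VERSION" ]
}

# Example use (commented out so that sourcing this file formats nothing):
# if namenode_is_formatted; then
#     echo "name node already formatted, skipping"
# else
#     hadoop namenode -format
# fi
```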

Start all processes:

- We are ready to start our Hadoop cluster (though it has only a single node)

Commands:

- start-all.sh

- starts all daemons (name node, secondary name node, data node, job tracker, task tracker)

- jps

- checks whether all services (i.e. name node, secondary name node, data node, job tracker, task tracker) have started
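Reading the jps output by eye is easy to get wrong, so here is a small sketch that reports which of the five daemons are missing. `missing_daemons` is a hypothetical helper name; it assumes jps prints one "pid ClassName" pair per line with the class names NameNode, SecondaryNameNode, DataNode, JobTracker and TaskTracker.

```shell
#!/usr/bin/env bash
# missing_daemons: hypothetical helper. Reads jps output on stdin and
# prints one line for each expected Hadoop 1.x daemon that is not listed.
# Returns 0 when all five are present, 1 otherwise.
missing_daemons() {
    local output daemon status=0
    output="$(cat)"
    for daemon in NameNode SecondaryNameNode DataNode JobTracker TaskTracker; do
        # Match the whole line so that NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$output" | grep -qE "^[0-9]+ ${daemon}\$"; then
            echo "not running: $daemon"
            status=1
        fi
    done
    return "$status"
}
```

It can be used as `jps | missing_daemons && echo "all daemons are up"`.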

To check cluster details on web interface:

- Open any browser and go to the following addresses:

- http://CSE-DBMS-04:50070/dfshealth.jsp

- for DFS (name-node) details. Write your hostname instead of "CSE-DBMS-04"

- http://CSE-DBMS-04:50030/jobtracker.jsp

- for Map Reduce (job tracker) details. Write your hostname instead of "CSE-DBMS-04"

Stop all processes:

- Use this when you want to stop (shut down) all your Hadoop cluster services

Command:

- stop-all.sh

For any queries, you can leave a comment or mail me at "brijeshbmehta@gmail.com"

Courtesy: Mr. Anand Kumar, NIT, Trichy

Originally posted on 14/12/2016 at https://brijeshbmehta.wordpress.com/2016/12/14/hadoop-1x-installation-and-configuration-on-single-node/
